Forget Funnels: Your Next GTM Engine Is Prompt-Led

How to cross the GenAI Divide and treat your prompts as strategic IP assets

The GenAI Divide

The age of AI is no longer emerging. It is. Upon. Us.

As of late 2025, a staggering 88% of organizations reported using AI in at least one business function. In marketing and sales, this has led to widespread tactical use of LLMs for everything from summarizing meetings with Granola and generating ad copy with Claude to full-blown workflows: intent-based outbound across Clay and Smartlead, or AI content generation with n8n, AirOps, and Webflow.

But here’s the paradox nobody is talking about. While everyone is using AI (or trying to), very few are actually succeeding with it.

We’re in a state of “prompt anarchy”: a tactical free-for-all grab of free prompt templates, libraries, and masterclasses (we even have some!). It seems to create value, but it can also be so overwhelming that it causes analysis paralysis.

This AI and prompting craze is why we’re witnessing a massive “GenAI Divide”. On one hand, critics say it’s spreading brain rot, making content marketers and operators lazier. On the other, it’s concentrating the real GTM alpha in the teams with the largest, most structured datasets and the ambition to mine them.

The proof is in the numbers. A recent MIT report found that a shocking 95% of generative AI pilots at enterprise companies are failing to make it to production. This represents a massive waste of resources and a catastrophic failure to capture AI value.

This is compounded by two other critical business risks:

The infrastructure gap: While 77% of executives feel urgent pressure to adopt AI, only 25% believe their IT infrastructure is actually ready for enterprise-wide scaling. It’s like trying to run a Tesla on a lawnmower engine. This shows not only that we’re still early, but that for real innovation in AI usage, we’re better off looking to the smaller, more nimble players building AI workflow software. That’s where the true AI alpha lies. More on this below.

The prompt management gap: Alex Halliday, founder of AirOps, put it this way: “Your prompts are your IP — keep them optimized and manageable like an engineer would”. Instead, most GTM teams treat prompts like disposable scripts rather than strategic assets. This leads to inconsistent quality and zero reusability, a far cry from the industry benchmark of 40-60% prompt reuse that high-performing teams achieve.
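To make “manage prompts like an engineer would” a bit more concrete, here is a minimal Python sketch, assuming a simple in-memory store. `PromptAsset`, `PromptLibrary`, and the `reuse_rate` definition (repeat renders of an existing prompt divided by all renders) are hypothetical illustrations, not AirOps’ product or the formula behind the 40-60% benchmark. It shows three habits: every prompt has a name, new revisions are appended rather than overwritten, and placeholders let one prompt serve many accounts.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptVersion:
    """One revision of a prompt template (hypothetical structure)."""
    version: int
    template: str
    note: str = ""


@dataclass
class PromptAsset:
    """A named prompt treated as a reusable asset, not a one-off chat message."""
    name: str
    versions: list[PromptVersion] = field(default_factory=list)
    uses: int = 0

    def publish(self, template: str, note: str = "") -> PromptVersion:
        # Append a new revision instead of overwriting, so history is kept.
        pv = PromptVersion(len(self.versions) + 1, template, note)
        self.versions.append(pv)
        return pv

    def render(self, version: int | None = None, **variables: str) -> str:
        # Default to the latest revision; fill $placeholders with variables.
        pv = self.versions[-1] if version is None else self.versions[version - 1]
        self.uses += 1
        return Template(pv.template).substitute(**variables)


class PromptLibrary:
    """A shared registry of prompt assets (hypothetical, in-memory only)."""

    def __init__(self) -> None:
        self.assets: dict[str, PromptAsset] = {}

    def get_or_create(self, name: str) -> PromptAsset:
        return self.assets.setdefault(name, PromptAsset(name))

    def reuse_rate(self) -> float:
        # Assumed definition: renders beyond each prompt's first use, over all renders.
        total = sum(a.uses for a in self.assets.values())
        first_uses = sum(1 for a in self.assets.values() if a.uses > 0)
        return 0.0 if total == 0 else (total - first_uses) / total


lib = PromptLibrary()
opener = lib.get_or_create("cold_email_opener")
opener.publish("Write a two-sentence opener for $company about $signal.", note="v1")
opener.publish(
    "Write a two-sentence opener for $company that references $signal. No buzzwords.",
    note="tighter tone",
)

print(opener.render(company="Acme", signal="a new VP of Sales"))
opener.render(company="Globex", signal="a Series B round")
opener.render(version=1, company="Initech", signal="hiring SDRs")
print(round(lib.reuse_rate(), 2))  # 3 renders, 1 first use -> 0.67
```

In a real team the store would be a shared repo or a prompt-management tool rather than a Python dict; the design choice being illustrated is that every prompt carries a name, a version history, and a usage count you can actually measure.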

So, in an environment where 95% of initiatives fail, how can GTM leaders cross the GenAI Divide and join the few mining the real GTM alpha? How do you transform scattered, failing pilots into a cohesive, strategic, and scalable growth engine that drives measurable business results?

There’s no one-size-fits-all solution. BUT we believe it starts with managing your prompts better: as actual IP to nurture, polish, and constantly refine.

And doing so systematically, with what we call a Prompt-Led Engine (PLE).

This requires treating prompts with the same rigor as other critical GTM assets like ad campaigns or sales playbooks. It’s what we see top operators doing at the GTM Engineer School, where students from the likes of Ahrefs, Google, Slack, and more learn how to build automated systems across AI GTM tools like Clay, n8n, Cargo, Octave, and AirOps to automate 10-hour manual processes and cut manual effort by 80%.

In this post, we’ll walk you through our systematic approach to designing, testing, managing, and deploying prompts as core components of your automated GTM strategy.

And we’ll share examples of who’s doing it well, so you know what good looks like!

Let’s dive in.

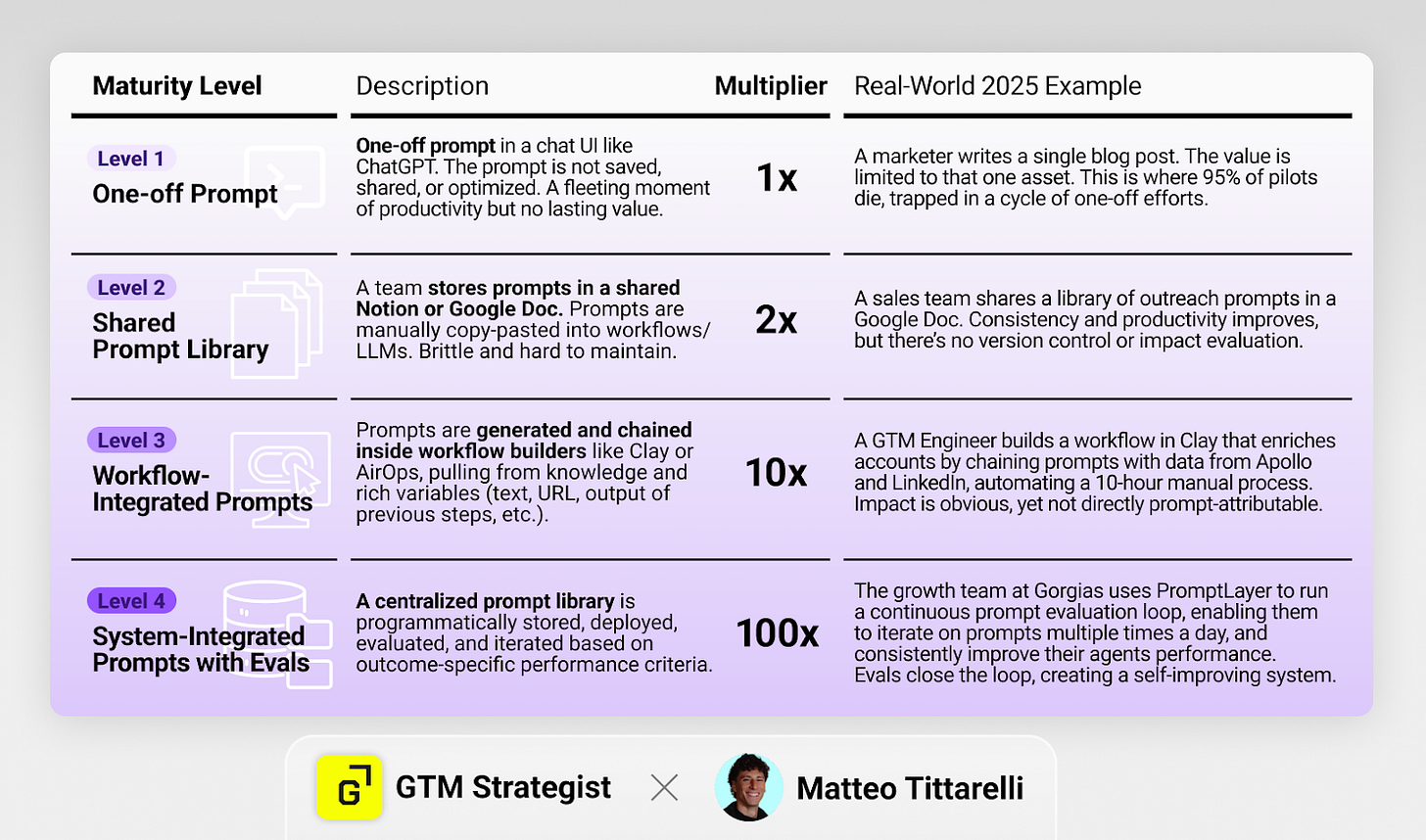

1. The 100x value multiplier of a Prompt-Led Engine

The journey from prompt anarchy to a Prompt-Led Engine isn’t just about getting organized — it’s about unlocking exponential value.

It’s a strategic shift from treating prompts as disposable commands to architecting them as durable, scalable assets.

As Brendan Short of The Signal notes, the future of GTM tech is about selling automated labor, not just software. In Brendan’s words:

“The GTM company that will break out beyond a $1B valuation (and into $100B territory) will be the one that builds something that looks like labor in the form of swiping a credit card (instead of sourcing+ramping+managing people, equipped with software).”

However, as we’ve learned in our careers, you can’t “boil the ocean in one pot”. You have to think in phases, in maturity levels like Crawl, Walk, Run. This is how you build that future, one level of maturity at a time.

And here’s how you can apply this thinking to your prompt-led engine.

Level 1: The Individual Prompt

An operator uses a one-off prompt to trigger a task in a chat UI like ChatGPT or Claude. Nothing new here.

Example: A marketer uses a prompt to write a single blog post. The value is limited to that one asset. This is where 95% of AI pilots die, trapped in a cycle of one-off efforts.

You might use a specific prompt generator to structure your prompt, but the prompt is not saved, shared, or optimized. It’s a dead end, offering a fleeting moment of productivity but no lasting value.

Level 2: Shared, Manual Prompt Library

Want to know all the details of the other levels, plus real-life applications of this framework from real companies? Head over to read the full article.
